Vibe coding, AI and fast MVPs: When play gets serious

What happens when AI-generated code and creative developer joy meet the real-world demands of security, privacy, and sustainable solutions?

“I was just making something at night...”

New stories are constantly being shared on social media and in developer forums:

“I built this app in an evening with Lovable”

“Look what I made with a bit of vibe coding and no-code!”

And we get it. The pace of technological development is enormous. With tools like Lovable, Copilot, Databutton and Replit, you can build working digital solutions in record time, even without a classic developer background. Creativity flourishes. The barrier to creating is lower than ever.

But right there, in the transition from playful idea to real solution, things start to get serious.

From “works” to “works safely”

Many of these fast AI-based solutions look impressive on the surface. They can include chat functions, data collection, APIs and user accounts. And it all works.

But under the hood?

- How is personal data stored?

- Is there control over who has access?

- Is the data encrypted?

- Are there logs, error handling, and consent mechanisms?

- Who holds the copyright, and who owns the application?

Often the answer is that nobody has thought about it yet. And that's understandable when the goal is to get something up and running quickly. The problem arises when the “prototype app” suddenly gets real users, or when the MVP is actually about to launch.

And it's not just new entrepreneurs and curious developers who walk into this trap. Even the biggest tech companies have found themselves in serious trouble because basic security and accountability were not addressed in time.

Real examples: Security holes and scandals

There is no shortage of stories in which haste and lack of security have had consequences:

- Meta (Instagram/Facebook) recently received a €91 million fine for storing users' passwords in plain text.

- DeepSeek AI left a database containing API keys, logs and metadata openly accessible by mistake.

- Replika AI has been criticized for unclear privacy practices and for data collection whose scope users did not understand.

- In Norway, Grindr was fined NOK 65 million for sharing users' GPS locations and advertising IDs with third parties without valid consent.

The common denominator? The apps worked. The technology worked. However, data security, control, transparency and compliance with GDPR were not adequately safeguarded.

Where do we come in?

At Increo, we root for vibe coding and rapid innovation. We love developer joy, experimentation and using AI as a tool to build new things. Lower barriers are a healthy development.

But we also know that there's a big difference between making something and launching something that can withstand reality.

This is where we have something to contribute:

- We build solutions that can scale, whether you started with an AI-generated MVP or an idea on a napkin.

- We think about security and GDPR from day one, helping you choose technologies, architectures, and integrations that are robust over time.

- We work with you, not only as a technical supplier, but as an advisor and partner.

You don't have to choose between speed and quality. But you do need someone who can strike the right balance.

When vibecoding + professionals = true value

Let's be clear: there's nothing wrong with building a prototype in one evening. On the contrary, it's awesome!

But if your app:

- collects or stores user data,

- integrates with external systems,

- is going to be used by real people in real situations...

It needs more than a good vibe.

At Increo, we constantly meet entrepreneurs and teams who have achieved a lot and need help to take the next step. To do things right. To ensure that the solution not only works, but is actually secure, legal and maintainable.

How we work — in practice

Many of the customers we talk to today want greater freedom to do development work themselves, and see AI and no-code as the way to get there. We believe in this trend and offer collaboration models that combine the customer's own tools with our security and domain expertise.

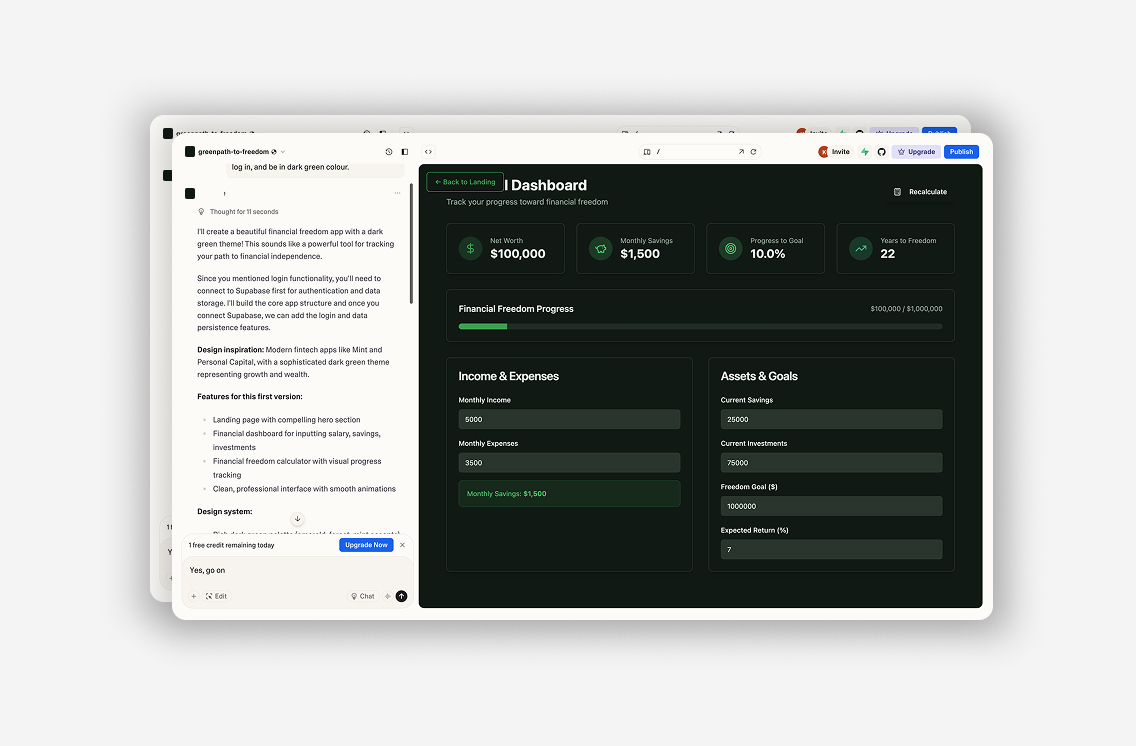

A good example is the platform Lovable.dev. It lets you build functional solutions quickly while also exporting the code (Next.js) for further development and quality assurance.

This is what a collaboration might look like:

- The customer builds the first version with Lovable.dev, preferably based on an existing style guide

- We contribute adjustments, design customization and a review of the Next.js code

- Together, we assess integration with systems such as Sanity and handle any other technical needs

- We help with publishing and ongoing advice, and together find out whether this way of working suits the customer in the long term

This model provides both freedom of action and control, and lets you scale further without losing overview, structure or compliance.

Are you there now?

Maybe you already have an AI-generated solution you're proud of. Maybe you've built something yourself — or with a low-code tool — and feel that you need a steady partner for the next phase.

We'd be happy to have a chat.

Whether it's assessing the architecture, reviewing security, discussing data processing, or helping you set up a real solution based on what you've already built.

Do you want to build fast, without compromising on security, responsibility and quality? Then we should talk.

Email us, call, or drop by — we'll be happy to help you take the next step.